Thoughts, notes and quotes from the Online Information 2008 Seminars: Part 4

This is the last of four posts gathering together my thoughts, notes and the top quotes from this year's Online Information seminars at Olympia. Today I'm posting about what I saw there, mostly around the topic of content publishing, from the 14th century to the 21st...

"Turning the pages - Bringing the world's most treasured books to life through technology" by Barry Smith

This presentation from the British Library was a case study into their online digitisation project "Turning the pages". For many of their rarest treasures, in order to see them, you have to come to London, and then get to look at just one page in a glass case. They wanted to provide a virtual book system that truly replicated the experience of having a book on your desk.

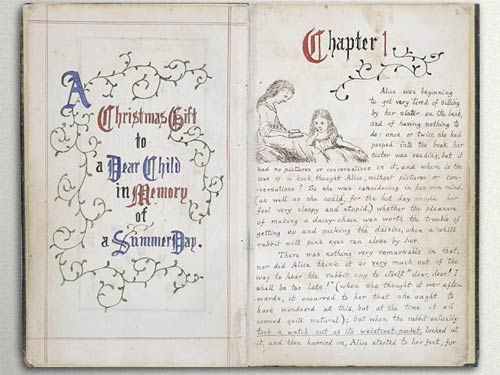

Barry Smith was a very engaging speaker, and his real enjoyment of playing with these virtual objects showed through. He demonstrated the system with a 14th century illuminated copy of the Qu'ran, making the point that the light reflections on the gold leaf change as you tilt the 'book' in your on-screen application. He also showed off the handwritten original manuscript of Lewis Carroll's "Alice in Wonderland", which has been put through the system. You can zoom in on the original hand-drawn illustrations, and also listen to the book being read aloud to you.

Two future developments of the system were demonstrated.

One is that they have licensed the software to other libraries, and so shortly you'll get the same experience with some specialist material about the history of Newcastle. The second was that the system has been adapted to be able to represent flat objects like maps, and 3-dimensional items from the British Library collection. Smith showed a virtual gold astrolabe.

One big black mark for the Library however.

Some of the most impressive features, like the realistic lighting effect, are only available to users of Windows XP and Vista. The rest of us have to make do with a 'lite' version. It seems a real shame that, despite their aim of freeing up the use of these books to any one with a web connection, they have hitched it to conditional use of a proprietary operating system.

Google Knol

I didn't do much glad-handing around the stands. In truth, a lot of the software on display for managing information looks like the user interface was designed by programmers for the Windows 3.1 version in the early 90s, and hasn't been updated since. I did notice, though, that Escenic, one of the key CMS systems for a variety of European newspaper sites, had a massive workflow on display that included 'Citizen Journalism'. I sure the real journalists were thrilled about that.

In contrast to their usual heavy presence at the kind of trade shows I go to, Google had a small one-man stand at Online Information, showcasing their 'Knol' project.

I had a chat on the stand, and I was interested to discuss what several people have commented on, that 'Knol' is all about applying attribution to knowledge, yet the recently launched 'SearchWiki' interface allows anyone to comment on any search result posing as anyone.

The reply, that with 'SearchWiki' it is all about lowering barriers to participation, doesn't really cut it for me.

At the moment there is nothing to stop anyone leaving comments on Google search listings about Russell Brand pretending to be Russell Brand, which I think is potentially going to become a big problem. It seems quite strange to be trying to offer a 'verifiable wikipedia' on the one hand, and enabling anonymous search graffiti on the other.

"Automated Content Access Protocol: Renewing respect for copyright on the Internet" by Mark Bide

I've been a very forthright critic of ACAP on this blog, so thought it well worth getting along to project director Mark Bide's session on the topic. Mark stated that "very few publishers have absolutely NO concerns about how their content is re-used", and he is quite right. I myself have wrestled with Google and the DMCA take-down process to shut down some blogs scraping my content from currybetdotnet. Whether ACAP is the right method to manage that issue remains to be seen, but there were certainly some interesting facts and figures in the presentation.

One key premise was that publishers specify terms and conditions of use on their website, which are generally only of interest to the person who wrote them. Certainly most people pay no heed to them, or understand the legalese, and machines face an even more hopeless task to interpret the meaning of these documents. ACAP aims to simplify that.

Over 700 sites are now ACAP enabled, and the kind of thing that the publishing industry is hoping to avoid is the wholesale aggregation of content, and its subsequent monetisation, without the original publisher getting a share. Some of their forensic web crawling research shows that within 24 hours of a news story being published, it can generally be found on at least 20 sites, of which 75% are monetising the content for themselves.

Mark Bide was keen to stress that ACAP was "a business-led not a technically-led agenda", and that the standard was not trying to dictate how machine-to-machine communication worked, but to specify what semantically they should be saying to each other about content use. He argued that robots.txt was not fit for the purpose of managing content on the network. It had been designed in 1993 with more of an eye on managing bandwidth scarcity, and had changed little since then.

They are now seeing that about 50% of implementations of ACAP are happening with publishers in the US, which marks a shift, as, he said, originally the project was seen a bit as European Luddites attempting to hold back American digital progress.

By far the biggest problem for the proposal is that up to this point the major search engines have been willing to join in talks and give advice, but nobody has implemented the system. The tag line on the literature handed out was:

"Implement today for free and take control of your digital destiny. Nothing to lose; everything to gain"

Nothing to lose maybe, but, unless there are some real practical gains soon, it will be hard to keep any momentum for the system up.

Bide predicted that there would be greater success in the b2b sphere, and I did think that there was good potential for ACAP to be used as a communication protocol between some of the systems on display at this type of trade show. This looked to me to be more like a promising line of future development. He said that in this arena "there should be no need of enforcement. We believe people will be compliant with the requirements of rights holders as they are now". I'm still very skeptical whether that will ever be true of the wider Internet ecosphere.

And finally...

As ever there were a couple of sessions at the show which I missed because they just proved too popular, and even though everybody's barcoded ID badge is scanned on the way into a seminar so they know who attended, there was no way of booking a seat in advance. I must, one of these days, try and get 'press accredited' at this sort of event, and see if I can invoke 'higher powers' to get into crammed sessions and squiggle my way down to the front. I was particularly sore at missing the Mike Ellis session on "What does Web 2.0 actually do for us?", and I also didn't get to Phil Bradley's "30 Web 2.0 resources in 30 minutes - revisited" talk.

It was a good show, if, in these times of recession, a little smaller than previous years. I very much enjoyed speaking at the FreePint stall, and I've a good hunch that I'll be there again in 2009...